6 Miscellaneous information on search engines

6.1 Google SandBox

At the beginning of 2004, a new and mysterious term appeared among SEO

specialists: Google SandBox. This is the name of a new Google spam

filter that excludes new sites from search results. The work of the SandBox

filter results in new sites being absent from search results for virtually any

phrase. This even happens with sites that have high-quality unique content and

which are promoted using legitimate techniques.

The SandBox is currently applied only to the English segment of the Internet;

sites in other languages are not yet affected by this filter. However, this

filter may expand its influence. It is assumed that the aim of the SandBox

filter is to exclude spam sites; indeed, no search spammer will be able to wait

for months until he gets the necessary results. However, many perfectly valid

new sites suffer the consequences. So far, there is no precise information as to

what the SandBox filter actually is. Here are some assumptions based on

practical SEO experience:

- SandBox is a filter that is applied to new sites. A new site is put in the

sandbox and is kept there for some time until the search engine starts treating

it as a normal site.

- SandBox is a filter applied to new inbound links to new sites. There is a

fundamental difference between this and the previous assumption: the filter is

not based on the age of the site, but on the age of inbound links to the site.

In other words, Google treats the site normally but it refuses to acknowledge

any inbound links to it unless they have existed for several months. Since such

inbound links are one of the main ranking factors, ignoring inbound links is

equivalent to the site being absent from search results. It is difficult to say

which of these assumptions is true; it is quite possible that they are both

true.

- The site may be kept in the sandbox from 3 months to a year or more. It has

also been noticed that sites are released from the sandbox in batches. This

means that the time sites are kept in the sandbox is not calculated individually

for each site, but for groups of sites. All sites created within a certain time

period are put into the same group and they are eventually all released at the

same time. Thus, individual sites in a group can spend different times in the

sandbox depending on where they were in the group capture-release cycle.
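
The batch-release observation above can be illustrated with a little date arithmetic. This is only a sketch: the creation dates and the release date below are hypothetical values chosen for illustration, not data from Google, and the function name days_in_sandbox is invented for this example.

```python
from datetime import date


def days_in_sandbox(created: date, batch_release: date) -> int:
    """Days a site waits if its whole batch is released on one date."""
    return (batch_release - created).days


# Hypothetical batch: sites created January to March, all released together.
release = date(2004, 9, 1)
early_site = days_in_sandbox(date(2004, 1, 5), release)
late_site = days_in_sandbox(date(2004, 3, 25), release)

print(early_site, late_site)  # the early site waits 80 days longer
```

Two sites in the same batch leave the sandbox on the same day, yet the one created at the start of the capture period waits noticeably longer than the one created at the end.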

Typical indications that your site is in the sandbox include:

- Your site is normally indexed by Google and the search robot regularly

visits it.

- Your site has a PageRank; the search engine knows about and correctly

displays inbound links to your site.

- A search by site address (www.site.com) displays correct results, with the

correct title, snippet (resource description), etc.

- Your site is found by rare and unique word combinations present in the text

of its pages.

- Your site is not displayed in the first thousand results for any other

queries, even for those for which it was initially created. Sometimes, there are

exceptions and the site appears among 500-600 positions for some queries. This

does not change the sandbox situation, of course.

There are no practical ways to bypass the SandBox filter. There have been some

suggestions about how it may be done, but they are no more than suggestions and

are of little use to a regular webmaster. The best course of action is to

continue SEO work on the site content and structure and wait patiently until the

site is released from the sandbox, after which you can expect a dramatic increase

in ratings, up to 400-500 positions.

6.2 Google LocalRank

On February 25, 2003, Google patented a new algorithm for

ranking pages called LocalRank. It is based on the idea that pages should

be ranked not by their global link citations, but by how they are cited among

pages that deal with topics related to the particular query. The LocalRank

algorithm is not used in practice (at least, not in the form it is described in

the patent). However, the patent contains several interesting innovations we

think any SEO specialist should know about. Nearly all search engines already

take into account the topics to which referring pages are devoted. The algorithms

they actually use probably differ from LocalRank, but studying the patent

gives a general idea of how such topic-based ranking may be implemented.

While reading this section, please bear in mind that it contains theoretical

information rather than practical guidelines.

The following three items comprise the main idea of the LocalRank algorithm:

1. An algorithm is used to select a certain number of documents relevant to

the search query (let it be N). These documents are initially sorted by

some criteria (this may be PageRank, relevance or a group of other criteria).

Let us call the numeric value of this criterion OldScore.

2. Each of the N selected pages goes through a new ranking procedure

and it gets a new rank. Let us call it LocalScore.

3. The OldScore and LocalScore values for each

page are multiplied to yield a new value, NewScore. The pages

are finally ranked based on NewScore.

The key procedure in this algorithm is the new ranking procedure, which gives

each page a new LocalScore rank. Let us examine this new procedure in more

detail:

0. An initial ranking algorithm is used to select N pages relevant to

the search query. Each of the N pages is allocated an OldScore

value by this algorithm. The new ranking algorithm only needs to work on these

N selected pages.

1. While calculating LocalScore for each page, the system selects

those pages from N that have inbound links to this page. Let this number

be M. At the same time, any other pages from the same host (as determined

by IP address) and pages that are mirrors of the given page will be excluded

from M.

2. The set M is divided into subsets Li. These subsets contain

pages grouped according to the following criteria:

- Belonging to one (or similar) hosts. Thus, pages whose first three octets

in their IP addresses are the same will get into one group. This means that

pages whose IP addresses belong to the range xxx.xxx.xxx.0 to

xxx.xxx.xxx.255 will be considered as belonging to one group.

- Pages that have the same or similar content (mirrors)

- Pages on the same site (domain).

3. Each page in each Li subset has rank OldScore. One page with

the largest OldScore rank is taken from each subset, the rest of pages

are excluded from the analysis. Thus, we get some subset of pages K

referring to this page.

4. Pages in the subset K are sorted by the OldScore parameter,

then only the first k pages (k is some predefined number) are left

in the subset K. The rest of the pages are excluded from the analysis.

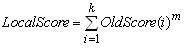

5. LocalScore is calculated in this step. The OldScore

parameters are combined together for the rest of k pages. This can be

shown with the help of the following formula:

Here m is some predefined parameter that may vary from one to

three. Unfortunately, the patent for the algorithm in question does not describe

this parameter in detail.
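
Steps 1 to 5 can be sketched in code for a single page. This is a simplified illustration, not the patented implementation. It assumes that step 5 sums the OldScore values of the k remaining pages, each raised to the power m. It groups linking pages only by the first three octets of their IP address, so mirror detection and same-domain grouping are left out. The Page class, the function names and the defaults for k and m are invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Page:
    url: str
    ip: str           # IPv4 address of the hosting server
    old_score: float  # rank assigned by the initial algorithm


def host_group(ip: str) -> str:
    """First three octets, so x.x.x.0 to x.x.x.255 share one key."""
    return ".".join(ip.split(".")[:3])


def local_score(target: Page, linking_pages: list[Page],
                k: int = 20, m: float = 1.0) -> float:
    """linking_pages: pages from the set N that link to target (the set M)."""
    # Step 1: exclude pages hosted in the same address range as the target.
    own_group = host_group(target.ip)
    candidates = [p for p in linking_pages if host_group(p.ip) != own_group]

    # Steps 2-3: split into subsets Li by host group, keep the top page of each.
    best_per_group: dict[str, Page] = {}
    for page in candidates:
        key = host_group(page.ip)
        current = best_per_group.get(key)
        if current is None or page.old_score > current.old_score:
            best_per_group[key] = page

    # Step 4: sort the survivors (subset K) by OldScore and keep the first k.
    top_k = sorted(best_per_group.values(),
                   key=lambda p: p.old_score, reverse=True)[:k]

    # Step 5 (assumed form): sum of OldScore values raised to the power m.
    return sum(p.old_score ** m for p in top_k)


target = Page("t", "10.0.0.1", 5.0)
links = [
    Page("a", "10.0.0.9", 9.0),  # same address range as target: excluded
    Page("b", "10.0.1.1", 3.0),  # same range as "c": only "c" survives
    Page("c", "10.0.1.2", 4.0),
    Page("d", "10.0.2.1", 2.0),
    Page("e", "10.0.3.1", 1.0),  # dropped by the k = 2 cutoff
]
print(local_score(target, links, k=2, m=2))  # 4.0**2 + 2.0**2
```

Note how a high-scoring link from the target's own address range contributes nothing, and how only one page per address range can contribute at all.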

After LocalScore is calculated for each page from the set N,

NewScore values are calculated and pages are re-sorted according to the new

criteria. The following formula is used to calculate NewScore:

NewScore(i)= (a+LocalScore(i)/MaxLS)*(b+OldScore(i)/MaxOS)

i is the page for which the new rank is calculated.

a and b are numeric constants (there is no more detailed

information in the patent about these parameters).

MaxLS is the maximum LocalScore among those calculated.

MaxOS is the maximum value among OldScore values.
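
The NewScore formula can be tried out on a few made-up values. In the sketch below, a = b = 1 is an arbitrary assumption, since the constants are not specified. The page names and scores are hypothetical, and the fallback to 1 when a maximum is zero is an addition to avoid division by zero rather than part of the formula.

```python
def new_scores(old_scores: dict[str, float],
               local_scores: dict[str, float],
               a: float = 1.0, b: float = 1.0) -> dict[str, float]:
    """NewScore(i) = (a + LocalScore(i)/MaxLS) * (b + OldScore(i)/MaxOS)."""
    # Guard (not in the formula): avoid dividing by zero if all scores are 0.
    max_ls = max(local_scores.values()) or 1.0
    max_os = max(old_scores.values()) or 1.0
    return {
        page: (a + local_scores[page] / max_ls) * (b + old_scores[page] / max_os)
        for page in old_scores
    }


old = {"p1": 10.0, "p2": 8.0, "p3": 4.0}    # hypothetical OldScore values
local = {"p1": 0.0, "p2": 20.0, "p3": 5.0}  # hypothetical LocalScore values

scores = new_scores(old, local)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)  # ['p2', 'p1', 'p3']
```

Page p1 has the highest OldScore but no links from the topical set, so p2 overtakes it once the two values are combined.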

Now let us put the math aside and explain these steps in plain words.

In step 0) pages relevant to the query are selected. Algorithms that do not

take into account the link text are used for this. For example, relevance and

overall link popularity are used. We now have a set of OldScore values.

OldScore is the rating of each page based on relevance, overall link

popularity and other factors.

In step 1) pages with inbound links to the page of interest are selected from

the group obtained in step 0). The group is whittled down by removing mirror and

other sites in steps 2), 3) and 4) so that we are left with a set of genuinely

unique sites that all share a common theme with the page that is under analysis.

By analyzing inbound links from pages in this group (ignoring all other pages on

the Internet), we get the local (thematic) link popularity.

LocalScore values are then calculated in step 5). LocalScore is the

rating of a page among the set of pages that are related by topic. Finally,

pages are rated and ranked using a combination of LocalScore and

OldScore.

6.3 SEO tips, assumptions, observations

This section provides information based on an analysis of various SEO

articles, communication between optimization specialists, practical experience

and so on. It is a collection of interesting and useful tips, ideas and

suppositions. Do not regard this section as written in stone, but rather as a

collection of information and suggestions for your consideration.

- Outbound links. Publish links to authoritative resources in your subject

field using the necessary keywords. Search engines place a high value on links

to other resources based on the same topic.

- Outbound links. Do not publish links to FFA sites and other sites excluded

from the indexes of search engines. Doing so may lower the rating of your own

site.

- Outbound links. A page should not contain more than 50-100 outbound links.

More links will not harm your site rating but links beyond that number will not

be recognized by search engines.

- Inbound site-wide links. These are links published on every page of the

site. It is believed that search engines do not approve of such links and do not

consider them while ranking pages. Another opinion is that this is true only for

large sites with thousands of pages.

- The ideal keyword density is a frequent SEO discussion topic. The real

answer is that there is no ideal keyword density. It is different for each query

and search engines calculate it dynamically for each search query. Our advice is

to analyze the first few sites in search results for a particular query. This

will allow you to evaluate the approximate optimum density for specific queries.

- Site age. Search engines prefer old sites because they are more stable.

- Site updates. Search engines prefer sites that are constantly developing.

Developing sites are those in which new information and new pages periodically

appear.

- Domain zone. Search engines prefer sites that are located in the zones .edu,

.mil, .gov, etc. Only the corresponding organizations can register such domains

so these domains are more trustworthy.

- Search engines track the percent of visitors that immediately return to

searching after they visit a site via a search result link. A large number of

immediate returns means that the content is probably not related to the

corresponding topic and the ranking of such a page gets lower.

- Search engines track how often a link is selected in search results. If

some link is only occasionally selected, it means that the page is of little

interest and the rating of such a page gets lower.

- Use synonyms and derived word forms of keywords; search engines will

appreciate that (keyword stemming).

- Search engines consider a very rapid increase in inbound links as

artificial promotion and this results in lowering of the rating. This is a

controversial topic because this method could be used to lower the rating of

one's competitors.

- Google does not take into account inbound links if they are on the same (or

similar) hosts. This is detected using host IP addresses. Pages whose IP

addresses are within the range of xxx.xxx.xxx.0 to xxx.xxx.xxx.255

are regarded as being on the same host. This opinion is most likely to be rooted

in the fact that Google has expressed this idea in its patents. However,

Google employees claim that no limitations of IP addresses are imposed on

inbound links and there are no reasons not to believe them.

- Search engines check information about the owners of domains. Inbound links

originating from a variety of sites all belonging to one owner are regarded as

less important than normal links. This information is presented in a patent.

- Search engines prefer sites with longer term domain registrations.
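
The keyword density tip above suggests comparing the top few results for a query. A minimal way to measure density for a piece of page text might look like the sketch below. It assumes a single-word keyword and a very simple tokenizer, and the function name and sample text are invented for illustration. Search engines do not publish how they compute density, so this is a rough comparison tool only.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Share of words in text that equal keyword (case-insensitive)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    return words.count(keyword.lower()) / len(words)


sample = "Cheap flights to Rome. Book cheap flights today."
print(keyword_density(sample, "cheap"))  # 2 of 8 words -> 0.25
```

Running this over the visible text of the first few results for a query gives an approximate density range to compare your own page against.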

6.4 Creating correct content

The content of a site plays an important role in site promotion for many

reasons. We will describe some of them in this section. We will also give you

some advice on how to populate your site with good content.

- Content uniqueness. Search engines value new information that has not been

published before. That is why you should compose your own site text and not

plagiarize excessively. A site based on materials taken from other sites is much

less likely to get to the top in search engines. As a rule, original source

material is always higher in search results.

- While creating a site, remember that it is primarily created for human

visitors, not search engines. Getting visitors to visit your site is only the

first step and it is the easiest one. The truly difficult task is to make them

stay on the site and convert them into purchasers. You can only do this by using

good content that is interesting to real people.

- Try to update information on the site and add new pages on a regular basis.

Search engines value sites that are constantly developing. Also, the more useful

text your site contains, the more visitors it attracts. Write articles on the

topic of your site, publish visitors' opinions, create a forum for discussing

your project. A forum is only useful if the number of visitors is sufficient for

it to be active. Interesting and attractive content guarantees that the site

will attract interested visitors.

- A site created for people rather than search engines has a better chance of

getting into important directories such as DMOZ and others.

- An interesting site on a particular topic has a much better chance of getting

links, comments, reviews, etc. from other sites on this topic. Such reviews can

give you a good flow of visitors while inbound links from such resources will be

highly valued by search engines.

- As a final tip, there is an old German proverb: "A shoemaker sticks to his

last", which means, "Do what you can do best." If you can write breathtaking and

creative textual prose for your website then that is great. However, most of us

have no special talent for writing attractive text and we should rely on

professionals such as journalists and technical writers. Of course, this is an

extra expense, but it is justified in the long term.

6.5 Selecting a domain and hosting

Currently, anyone can create a page on the Internet without incurring any

expense. Also, there are companies providing free hosting services that will

publish your page in return for their entitlement to display advertising on it.

Many Internet service providers will also allow you to publish your page on

their servers if you are their client. However, all these variations have

serious drawbacks that you should weigh carefully if you are creating a

commercial project.

First, and most importantly, you should obtain your own domain for the

following reasons:

- A project that does not have its own domain is regarded as a transient

project. Indeed, why should we trust a resource if its owners are not even

prepared to invest in the tiny sum required to create some sort of minimum

corporate image? It is possible to publish free materials using resources based

on free or ISP-based hosting, but any attempt to create a commercial project

without your own domain is doomed to failure.

- Your own domain allows you to choose your hosting provider. If necessary,

you can move your site to another hosting provider at any time.

Here are some useful tips for choosing a domain name.

- Try to make it easy to remember and make sure there is only one way to

pronounce and spell it.

- Domains with the extension .com are the best choice to promote

international projects in English. Domains from the zones .net, .org, .biz,

etc., are available but less preferable.

- If you want to promote a site with a national flavor, use a domain from the

corresponding national zone. Use .de for German sites, .it for Italian

sites, etc.

- In the case of sites containing two or more languages, you should assign a

separate domain to each language. National search engines are more likely to

appreciate such an approach than subsections for various languages located on

one site.

A domain costs $10-20 a year, depending on the particular registration

service and zone.

You should take the following factors into consideration when choosing a

hosting provider:

- Access bandwidth.

- Server uptime.

- The cost of traffic per gigabyte and the amount of prepaid traffic.

- The site is best located in the same geographical region as most of your

expected visitors.

The cost of hosting services for small projects is around $5-10 per month.

Avoid "free" offers while choosing a domain and a hosting provider. Hosting

providers sometimes offer free domains to their clients. Such domains are often

registered not to you, but to the hosting company. The hosting provider will be

the owner of the domain. This means that you will not be able to change the

hosting service of your project, or you could even be forced to buy out your own

domain at a premium price. Also, you should not register your domains via your

hosting company. This may make moving your site to another hosting company more

difficult even though you are the owner of your domain.

6.6 Changing the site address

You may need to change the address of your project. Maybe the resource was

started on a free hosting service and has developed into a more commercial

project that should have its own domain. Or maybe the owner has simply found a

better name for the project. In any case, moving a project to a new address is

a difficult and unpleasant task. For starters, you will have to promote the new

address almost

from scratch. However, if the move is inevitable, you may as well make the

change as useful as possible.

Our advice is to create your new site at the new location with new and unique

content. Place highly visible links to the new resource on the old site to allow

visitors to easily navigate to your new site. Do not completely delete the old

site and its contents.

This approach will allow you to get visitors from search engines to both the

old site and the new one. At the same time, you get an opportunity to cover

additional topics and keywords, which may be more difficult within one resource.
